Dive-in:

The majority of Python script tools that execute successfully on your computer will publish and execute successfully on a GIS Server; you do not have to modify your script in any way. However, if you encounter problems, they may be due to your script using a lot of project data or using import statements to import Python modules you developed. In this case, you may find this topic helpful, as it delves into the following details:

- How project data used in a script is found and made available on the server for task execution.

- How imported modules are found and made available on the server for task execution.

- How to make project data and Python modules into parameters of your scripts.

- How tool validation code is handled and how it interacts with the client and the server.

- How third-party libraries are handled.

If you are unfamiliar with Python, ArcPy, or script tools, skip to the Getting started with Python, ArcPy, and script tools section below for a list of useful topics.

How project data in your script is found

Whenever you share a result, either as a package or as a service, and the result references a script tool, the script tool is scanned to discover any project data used in the script. When project data is found, it is consolidated into a temporary folder that is either packaged (if you are sharing a package) or uploaded to the server (if you are sharing a service).

When your script is scanned, every quoted string (in either single or double quotes) used in a Python variable or as an argument to a function is tested to see if it is a path to data that exists. Data, in this case, means:

- A layer in the table of contents (ArcMap or ArcGlobe)

- A folder

- A file

- A geodataset, such as a feature class, shapefile, geodatabase, map document (.mxd), and layer file (.lyr)

For the purposes of discussion, only data that is used as input to geoprocessing tools or paths referencing other Python modules is of interest. Output data is also consolidated, but it's not considered to be project data.
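To make the scan concrete, the following is a minimal sketch that approximates it in plain Python; it is not Esri's implementation. It assumes that collecting every string literal is close enough (the real scan looks at strings used in variables and function arguments), uses the standard `ast` module to find those literals, and keeps the ones that name an existing file or folder. The function name `find_candidate_paths` and the `base_dir` parameter (standing in for the script location) are hypothetical.

```python
import ast
import os


def find_candidate_paths(source, base_dir):
    """Return string literals in `source` that name an existing file or folder.

    Hypothetical illustration only: relative strings are resolved against
    `base_dir`, which stands in for the script location.
    """
    found = []
    for node in ast.walk(ast.parse(source)):
        # String literals appear as ast.Constant nodes whose value is a str
        if isinstance(node, ast.Constant) and isinstance(node.value, str):
            text = node.value
            if os.path.isabs(text):
                candidate = text
            else:
                candidate = os.path.join(base_dir, text)
            # Skip empty strings, which would otherwise resolve to base_dir
            if text and os.path.exists(candidate):
                found.append(text)
    return found
```

Each string is tested on its own, so in `os.path.join(folder, "Warehouse.lyr")` the literal `"Warehouse.lyr"` only matches if such a file sits directly in the script location.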

Whenever a quoted string is found in the script, the test for data existence proceeds as follows:

- Does the string refer to a layer in the table of contents?

- Does the string contain an absolute path to data (such as "e:\Warehousing\ToolData\SanFrancisco.gdb\streets")?

- Does the string reference data that can be found relative to the script location? The script location is defined as follows:

  - The folder containing the script.

  - If the script is embedded in the toolbox, the location is the folder containing the toolbox.

  - If the script is in a Python toolbox, the location is the folder containing the Python toolbox.

These tests proceed in sequential order. If a test passes and the data exists, the data will be consolidated, with one exception: if you are sharing a service, the server's data store is examined to determine whether the data resides in the data store. If it does, it is not consolidated.
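The sequence of tests above can be sketched as a small classifier. This is a hypothetical model for illustration: `resolve_data_reference` and `script_location` are invented names, layer names are passed in as a plain set, and the data store check for services is not modeled.

```python
import os


def script_location(script_path=None, toolbox_path=None):
    """Folder used for relative lookups.

    This is the folder containing the script, or the folder containing the
    toolbox (or Python toolbox) when the script is embedded in it.
    """
    if script_path:
        return os.path.dirname(os.path.abspath(script_path))
    return os.path.dirname(os.path.abspath(toolbox_path))


def resolve_data_reference(text, layer_names, script_dir):
    """Apply the three tests in order; return (kind, value) or None."""
    # 1. A layer in the table of contents, matched by name
    if text in layer_names:
        return ("layer", text)
    # 2. An absolute path to data that exists
    if os.path.isabs(text):
        return ("absolute", text) if os.path.exists(text) else None
    # 3. Data found relative to the script location
    candidate = os.path.join(script_dir, text)
    if os.path.exists(candidate):
        return ("relative", candidate)
    return None
```

Because the tests run in order, a string that matches a layer name is treated as a layer even if a file with the same name happens to exist next to the script.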

Note:

When folders are consolidated, only files and geodatasets within the folder are copied; no subfolders are copied. Some geodatasets, such as file geodatabases, rasters, and TINs, are technically folders, but they are also geodatasets, so they will be copied. If the folder contains layer files (.lyr) or map documents (.mxd), all data referenced by the layer file or map document is also consolidated so that any arcpy.mapping routines in the script can gain access to the referenced data.
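The copy rule in the note above can be sketched with the standard `shutil` module. This is a simplified, hypothetical model: `consolidate_folder` is an invented name, only folders ending in `.gdb` are treated as geodatasets (real detection of rasters and TINs is more involved), and data referenced by .lyr or .mxd files is not followed.

```python
import os
import shutil

# Folder suffixes treated as geodatasets. This is an assumption for the
# sketch; it does not recognize raster or TIN folders.
GEODATASET_FOLDER_SUFFIXES = (".gdb",)


def consolidate_folder(src, dst):
    """Copy files and geodataset folders from src to dst, skipping other subfolders."""
    os.makedirs(dst, exist_ok=True)
    copied = []
    for name in sorted(os.listdir(src)):
        path = os.path.join(src, name)
        if os.path.isfile(path):
            # Plain files (including .lyr and .mxd files) are copied
            shutil.copy2(path, os.path.join(dst, name))
            copied.append(name)
        elif os.path.isdir(path) and name.lower().endswith(GEODATASET_FOLDER_SUFFIXES):
            # A file geodatabase is a folder on disk, but it is copied whole
            shutil.copytree(path, os.path.join(dst, name))
            copied.append(name)
        # Any other subfolder is skipped
    return copied
```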

Tip:

Due to the way folders are consolidated, you should avoid cluttering the folder with large datasets and files that will never be used by your tool; it unnecessarily increases the size of the data to be packaged or uploaded to the server. (This does not apply to folders found in a server's data store as these folders are not uploaded to the server.)

Examples

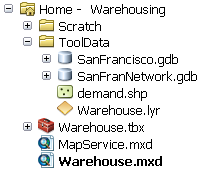

The examples below are based on this folder structure:

Relative paths to datasets

The following technique of finding data relative to the location of the script is a common pattern, especially for services created for ArcGIS 10.0. The ToolData folder contains SanFrancisco.gdb. Within SanFrancisco.gdb is a feature class named Streets. In the code sample below, the path to the ToolData folder and the datasets inside are constructed relative to the location of the script (the same directory as the Warehouse.tbx).

```python
import arcpy
import os
import sys

# Get the directory the script lives in.
# Folders and data will be found relative to this location.
scriptPath = sys.path[0]

# Construct paths to ToolData/SanFrancisco.gdb/Streets and
# ToolData/Warehouse.lyr, relative to the script's folder
streetFeatures = os.path.join(scriptPath, "ToolData\\SanFrancisco.gdb\\Streets")
streetLyr = os.path.join(scriptPath, "ToolData\\Warehouse.lyr")
```

In the code above, the variables streetFeatures and streetLyr will be tested to see if they reference data that exists. These datasets will be consolidated and uploaded to the server (unless the folder they reside in has been referenced as part of the server's data store).

Relative paths to folders

The ToolData folder itself can be referenced and used as a starting point to reference items. In the code sample below, the path to the ToolData folder is constructed relative to the location of the script (the same directory as the Warehouse.tbx).

```python
import arcpy
import os
import sys

# Get the directory the script lives in.
# Folders and data will be found relative to this location.
scriptPath = sys.path[0]

# Construct the path to the ToolData folder
toolDataFolder = os.path.join(scriptPath, "ToolData")

# Construct the path to items inside the folder
streetFeatures = os.path.join(toolDataFolder, "SanFrancisco.gdb\\Streets")
```

In the code above, the variable toolDataFolder holds a path, built relative to the script location, to a folder of various items that can be referenced throughout your Python script. This ToolData folder will be consolidated: all its contents (with the exception of subfolders, as noted above) will be packaged or uploaded to the server (unless the ToolData folder is part of the server's data store).

When you reference a folder, the folder's entire contents are copied, not just the individual files your script names. For example, in the above code, even though no explicit path to Warehouse.lyr has been created, the file will be consolidated because it exists in the referenced folder.

Absolute path to a geodataset

An absolute path is one that begins with a drive letter, such as e:/, as shown in the code sample below.

```python
import arcpy
import os

streetFeatures = 'e:/Warehousing/ToolData/SanFrancisco.gdb/Streets'
```

In the above code, the Streets dataset, and all other data it depends upon (such as relationship classes and domains), will be consolidated.

Hybrid example

```python
import arcpy
import os

toolDataPath = r'e:\Warehousing\ToolData'
warehouseLyr = os.path.join(toolDataPath, "Warehouse.lyr")
```

In the above code, the entire contents of the ToolData folder are consolidated, because toolDataPath is a quoted string that references an existing folder. Since the folder contents (minus subfolders) are consolidated, Warehouse.lyr will be consolidated as well, along with any data referenced by Warehouse.lyr.

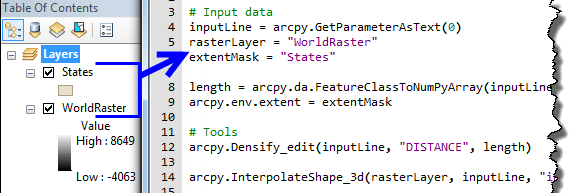

Referencing layers as project data

A not as common workflow of using layers as project data can result in significant performance improvements for your Python script tool. The above Python snippets use full paths to feature classes and layer files. When a geoprocessing service is executed, it must first open the dataset, and opening a dataset is a performance hit. Using layers in your script keep the data opened and cached for faster execution. The following image shows how layers within the ArcMap Table of Contents are matched up and used within the Python script.

The two layers from the Table of Contents are used inside the script tool. The variables point to simple strings which match the layer names inside the map document. When publishing this workflow to ArcGIS Server, the data will be consolidated and moved to the server (if not referenced in the data store) and the service will hold a reference to the layers in memory. Within that service the tool will find and use layers through this name match.

Note:

When using layers as internal project data to a script tool, the script tool becomes dependent on the associated map document. You cannot execute the tool in ArcCatalog or from another map document without those layers present. This pattern will not work with Background Processing and reduces the general portability of the workflow. As such, this pattern is mostly suited to creating geoprocessing services.

Forward versus backward slashes

The Windows convention is to use a backward slash (\) as the separator in a path. UNIX systems use a forward slash (/).

Note:

Throughout ArcGIS, it doesn't matter whether you use a forward or backward slash in your path—ArcGIS will always translate forward and backward slashes to the appropriate operating system convention.
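Outside ArcGIS, Python's standard library shows a similar tolerance for Windows paths. The sketch below uses the `ntpath` module, which applies Windows path rules on any operating system and accepts both separators; it illustrates plain Python behavior, not ArcGIS's own translation.

```python
import ntpath
import posixpath

# A Windows-style path written with forward slashes (no escaping needed)
forward = "E:/data/telluride/newdata.gdb/slopes"

# ntpath.normpath rewrites "/" as the Windows separator "\"
windows_style = ntpath.normpath(forward)
print(windows_style.split(ntpath.sep))
# ['E:', 'data', 'telluride', 'newdata.gdb', 'slopes']

# Both modules can pull the last component out of a forward-slash path
print(ntpath.basename(forward), posixpath.basename(forward))  # slopes slopes
```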

Backward slash in scripting

Programming languages that have their roots in UNIX and the C programming language, such as Python, treat the backslash (\) as the escape character. For example, \t signifies a tab. Since paths can contain backslashes, you need to prevent backslashes from being interpreted as escape characters. The easiest way is to convert paths into Python raw strings by adding the r prefix, as shown below. This instructs Python to treat backslashes as literal characters.

```python
thePath = r"E:\data\telluride\newdata.gdb\slopes"
```

Importing other Python modules

Your script may import other scripts that you developed. For example, the code below imports a Python module named myutils.py, which is found in the same directory as the parent script and contains a routine named getFIDName.

```python
import arcpy
import myutils

inFeatures = arcpy.GetParameterAsText(0)
inFID = myutils.getFIDName(inFeatures)
```

Whenever an import statement is encountered, the following order is used to locate the module:

- The same folder as the script. If the script is embedded in the toolbox, the folder containing the toolbox is used.

- The folder referenced by the system's PYTHONPATH variable.

- Any folder referenced by the system's PATH variable.
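The lookup order in the list above can be modeled as follows. This is a hypothetical sketch of that search for illustration, not Esri's code: `find_module_file` is an invented name, and it only looks for a `<name>.py` file. Note that Python's own import system does not consult PATH; that step belongs to the search described here.

```python
import os


def find_module_file(module_name, script_dir, environ=None):
    """Return the first <module_name>.py found, or None.

    Folders are searched in the order described above: the script's
    folder, then PYTHONPATH entries, then PATH entries.
    """
    env = os.environ if environ is None else environ
    folders = [script_dir]
    for var in ("PYTHONPATH", "PATH"):
        # Both variables hold folder lists separated by os.pathsep
        folders.extend(p for p in env.get(var, "").split(os.pathsep) if p)
    for folder in folders:
        candidate = os.path.join(folder, module_name + ".py")
        if os.path.isfile(candidate):
            return candidate
    return None
```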

Another technique for referencing modules to import is to use the sys.path.append method. This allows you to set a path to a folder containing scripts that you need to import.

```python
import arcpy
import sys
import os

# Append the path to the utility modules to the system path
# for the duration of this script.
myPythonModules = r'e:\Warehousing\Scripts'
sys.path.append(myPythonModules)

import myutils  # a Python file within myPythonModules
```

In the above code, note that the sys.path.append method requires a folder as an argument. Since r'e:\Warehousing\Scripts' is a folder, the entire contents of the folder will be consolidated. The rules for copying folder contents apply here as well—everything in the folder is copied except for subfolders that are not geodatasets.
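If you would rather not leave the folder on sys.path after the import, one option is a small context manager that appends it and then removes it again. The sketch below uses only the standard `contextlib` and `sys` modules; `temporary_sys_path` is a hypothetical name, not part of ArcPy. Because the folder would still be written as a quoted string passed to a function, the scanning rules above would presumably still apply to it.

```python
import contextlib
import sys


@contextlib.contextmanager
def temporary_sys_path(folder):
    """Append `folder` to sys.path only for the duration of a with-block."""
    sys.path.append(folder)
    try:
        yield folder
    finally:
        # Remove the last occurrence, which is the entry appended above,
        # even if the code inside the block raised an exception
        for index in range(len(sys.path) - 1, -1, -1):
            if sys.path[index] == folder:
                del sys.path[index]
                break
```

For example, `with temporary_sys_path(myPythonModules): import myutils` would import the module and then restore sys.path. Modules imported inside the block stay loaded in sys.modules afterward, so myutils remains usable.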

Note:

Python scripts within the folder are not scanned for project data or imported modules.

Tool validation code

If you have experience writing script tools, you may be providing your own tool validation logic. Clients of a geoprocessing service do not have the capability to execute your tool validation logic; only the server has this capability. When the client sends its execute task request to the service, your validation logic executes on the server. If your validation routines throw an error, the task stops execution. If you're returning messages from your service, the client will receive messages thrown by your validation routines.

Validation logic is implemented with Python, and your validation code will be scanned for project data and modules, just like any other Python script. For example, your validation logic may open a folder (for example, d:\approved_projections) containing projection files (.prj) to build a choice list of spatial references the client can use when they execute your tool. This folder is not a tool parameter; it's project data used within your tool validation script. The same rules described above for Python scripts apply here, and the consequence is that the d:\approved_projections folder will be consolidated and copied to the server (unless it's found in the server's data store).
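For the projection example above, the core of such validation logic might be a helper that lists the .prj files in a folder. The sketch below is hypothetical: `projection_choices` is an invented name, and it uses only the standard `os` module. In a ToolValidator class, a list like this would typically be assigned to a parameter's filter.list inside updateParameters; that wiring requires arcpy and is not shown.

```python
import os


def projection_choices(folder):
    """Return the sorted base names of .prj files in `folder` for a choice list."""
    return sorted(
        os.path.splitext(name)[0]
        for name in os.listdir(folder)
        if name.lower().endswith(".prj")
    )
```

In the validation code itself, the folder (such as d:\approved_projections) would appear as a quoted string, which is what causes it to be consolidated.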

Making project data and modules tool parameters

As described above, your Python scripts are scanned when shared, and decisions are made about what data your script uses based on quoted strings found in your code. If you want to take control of this process, you can employ the practice of creating parameters for all data and modules used by your script. The script sample below shows the idea—all project data and modules are made into parameters that are passed into the script.

```python
import arcpy
import sys
import os

inFeatures = arcpy.GetParameterAsText(0)  # Feature Layer
projectDataFolder = arcpy.GetParameterAsText(1)  # Folder
myPythonModules = arcpy.GetParameterAsText(2)  # Folder

# Append the path to the utility modules to the system path
# for the duration of this script.
sys.path.append(myPythonModules)

import myutils  # a Python file within myPythonModules

# Construct a variable to hold the Streets feature class found in the
# project data folder
streetsFeatures = os.path.join(projectDataFolder, "SanFrancisco.gdb", "Streets")
```

When your script uses parameters for all its data as shown above, several good things happen:

- You are more aware of the data your script needs—because it's a parameter, it's there for you to see on the tool dialog box. You don't have to edit your code to determine what data you're using.

- Internal tool validation logic takes over—if the parameter value references data that doesn't exist, the tool dialog box will display an error, and you cannot execute the tool to create a result.

- To reset the location of the data, you can browse for the location using the tool dialog box rather than typing the location in your script (which is error-prone).

- When the result is shared as a service, the Service Editor will set folder parameters to an Input mode of Constant; your client never sees the parameters. When you publish, the two folders will be copied to the server (unless they are registered with the data store). When your task executes on the server, your script will receive the paths to the copied folders.
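Because folder parameters arrive as plain path strings, a script can build every data path from them, so the same code works with the original local folder and with the copy the server receives. The helper below is a hypothetical sketch: `streets_path` is not part of ArcPy, and it assumes the folder layout used in this topic (SanFrancisco.gdb containing Streets). It fails early with a clear message if the geodatabase is missing.

```python
import os


def streets_path(project_data_folder):
    """Build the Streets feature class path from the folder parameter value.

    The value is plain text (for example, what arcpy.GetParameterAsText
    returns), so this works with any folder location.
    """
    gdb = os.path.join(project_data_folder, "SanFrancisco.gdb")
    # A file geodatabase is a folder on disk
    if not os.path.isdir(gdb):
        raise ValueError("SanFrancisco.gdb not found in " + project_data_folder)
    return os.path.join(gdb, "Streets")
```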

Third-party modules

Third-party modules (any module that is not part of the core Python installation) are not consolidated. You need to ensure the module exists and runs correctly on the server. This does not apply to the numpy and matplotlib modules, which are installed with ArcGIS Server.

Note:

Installing third-party Python modules (other than numpy and matplotlib) on Linux requires special handling.

Learn more about installing third-party Python modules on Linux
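Before publishing, you could check whether the third-party modules your script imports can be found by the Python installation the server uses. The sketch below relies only on the standard `importlib.util.find_spec` function; `missing_modules` is a hypothetical name, and the module names you pass in are up to you. It only reports whether a module can be located, not whether it runs correctly.

```python
import importlib.util


def missing_modules(names):
    """Return the module names that the current Python cannot locate."""
    missing = []
    for name in names:
        try:
            found = importlib.util.find_spec(name) is not None
        except ModuleNotFoundError:
            # Raised for dotted names whose parent package is missing
            found = False
        if not found:
            missing.append(name)
    return missing
```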

Getting started with Python, ArcPy, and script tools

If you are unfamiliar with Python, ArcPy, and script tools, the table below lists a few topics that will help you get started.

| Help topic | Contents |
|---|---|
| | Basic concepts of creating your own geoprocessing tools. |
| | Introductory topics to Python and ArcPy. These topics lead you to more detailed topics about Python and the ArcPy site package. |
| | Introductory topic on creating custom script tools using Python. |
| | Once you've become familiar with the process of creating a script tool, this topic is referred to often as it explains in detail how to define script tool parameters. |